We are a couple of months post-launch with Glean and are focused on creating truly useful and reliable agents across the company. We rely heavily on the Agent Builder to optimize our agents. I really like the recently added Debug Mode, which provides much more visibility into agent runs as part of the Preview experience, and I also appreciate the addition of formal versioning.

That said, I still find it inefficient to systematically evaluate and optimize our Glean agents. This creates a slow feedback loop when verifying whether changes actually improve agent performance, which in turn makes it harder to iterate quickly. As a result, many changes end up being made without proper isolated testing.

Based on our experience building agents in other platforms like LangGraph (using LangSmith for observability and evaluation) and Intercom (our AI-first Support System with Fin AI training and evaluation tools built in), it would be great if the Agent Builder supported a more systematic evaluation approach.

Ideal experience might include:

- Define a dataset of agent prompts that the agent should be able to handle

- Run those prompts in bulk

- Manually score/grade the outputs (e.g. bad, acceptable, good) while being able to trace through the agent run (similar to Debug Mode)

- Track previous experiments, including all runs, scores, and access to traces

- [Nice to have / future] LLM-based judging of outputs

As a workaround: is it possible to add observability via a platform like LangSmith to a Glean agent? For example, could we trace Glean agent runs using LangSmith and automate this type of systematic evaluation?

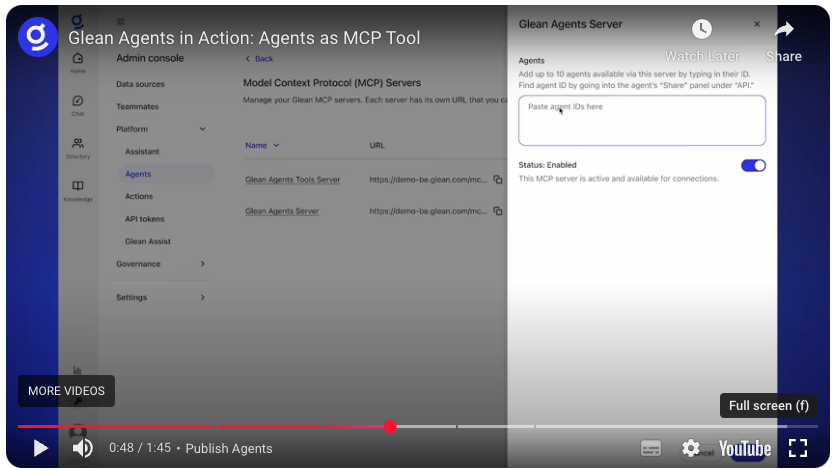

Not sure if MCP could unlock this possibility as implied here - we're playing with the recently opened Remote MCP beta in a couple of use cases, but I don't believe we can interface with specific Glean agents via MCP, nor would it provide the detailed traces that might be needed to diagnose bad responses.